In a recent redesign of the PutYourLightsOn website, we decided to start with a blank slate. It became an excellent opportunity to re-evaluate the state of web publishing tools in 2018 with the aim of utilising the best possible means to build a lightning-fast site that would still enable a great authoring experience.

It is no secret that I love working with Craft CMS, and it was, of course, my first choice in terms of authoring experience. Yet I am also well aware that sites powered by Craft can sometimes feel a bit sluggish if set up inefficiently. So the first option was to build an optimised and lightweight Craft site to get the most performance out of it.

Option 1

Build an optimised and lightweight Craft site to get the most performance out of it.

TTFB (time to first byte) is the time it takes for a web server to respond with the first byte of data to a request for a web page, and it is an important metric in web performance. Google’s PageSpeed Insights triggers an “Improve Server Response Time” alert if your server response time is above 200 milliseconds.

When a web server receives a request for a web page, one of two things generally happens. Either the server returns data or a file directly from memory or from the file system (for example, a page that has previously been processed and stored in memory, or an image or other resource file), or it passes the request on to an application running behind it, which processes the request and does whatever it needs to do before returning a result.

Craft CMS is written in PHP, a programming language that generally runs behind an Apache or Nginx web server. When the web server receives a request for a page that is managed by Craft, a PHP process bootstraps the web app and starts a chain of events that determines exactly how the request should be processed. At the end of that chain, a response is sent back to the user’s web browser, and the time between sending the request and receiving the first byte of that response is the TTFB.
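To check a page against the 200 ms threshold, TTFB can be approximated from a script. The following Python sketch is illustrative only and uses just the standard library’s `http.client`. The function names `measure_ttfb` and `is_slow` are hypothetical. Because the TCP connection is opened before the clock starts, the result excludes DNS and connection setup and is closer to the server’s response time than to a browser’s full page timing.

```python
import http.client
import time


def measure_ttfb(host: str, port: int, path: str = "/", timeout: float = 10.0) -> float:
    """Return an approximate time to first byte, in seconds, for a plain HTTP GET.

    The clock starts just before the request is sent and stops once the
    response status line and headers have been read, which happens right
    after the first bytes of the response arrive.
    """
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.connect()  # open the TCP connection before starting the clock
        start = time.perf_counter()
        conn.request("GET", path)
        response = conn.getresponse()  # blocks until status line and headers are read
        ttfb = time.perf_counter() - start
        response.read()  # drain the body so the connection can close cleanly
        return ttfb
    finally:
        conn.close()


def is_slow(ttfb_seconds: float, threshold_ms: float = 200.0) -> bool:
    """Flag responses slower than the 200 ms PageSpeed Insights threshold."""
    return ttfb_seconds * 1000 > threshold_ms
```

For example, `is_slow(measure_ttfb("example.com", 80, "/about"))` reports whether that page’s server response exceeds the threshold. A single measurement is noisy, so averaging several runs gives a more useful number.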

Obviously, a page may need a fair amount of processing to determine what to return to the web browser, so sometimes that trip to the Craft application is unavoidable and potentially slow (upwards of 600 ms). But it makes very little sense to process every request for the same page over and over again, especially if the result is always going to be the same.

Imagine you worked at a bakery that sold delicious home-made scones, the best in town. On a busy day, you run out of scones and have to tell each customer who comes in that unfortunately there are none left. You’d like to bake some more, but dealing with all the customers is taking up all of your time, so you put up a sign outside the bakery: “Sold out of scones”. That frees you up to bake some more, and when the new batch is ready you simply change the sign: “Freshly baked scones!”.

Without the sign, the baker is like a web server that fires up a PHP process and runs Craft on each and every request to a page just to determine whether there are scones or not. Working that out may be a very involved process, but once the answer is known it does not need to be worked out again until something within the system has changed, i.e. the scones have sold out or a fresh batch has come out of the oven.

Now imagine an “about” page on your website that outputs some text about your company. Even though that’s all it does, by default the web server will still process each and every request for that page and always return the same result. One simple and effective way to avoid this is static file caching. Statically caching a web page means storing the result of the first request and returning that stored result in response to subsequent requests, without doing any processing. This results in huge performance gains: responses are sent almost immediately, with little or no processing power involved.
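The core idea can be sketched in a few lines of Python. This is a hypothetical, in-memory illustration of the concept, not how Blitz or any server-side cache is actually implemented. `StaticPageCache`, `serve`, `invalidate` and the `render` callback (standing in for a full Craft request) are names invented for this example. A real static cache would typically write HTML files to disk or a shared store rather than keep them in a Python dict.

```python
from typing import Callable, Dict


class StaticPageCache:
    """Minimal in-memory static page cache keyed by URI (illustrative sketch)."""

    def __init__(self) -> None:
        self._pages: Dict[str, str] = {}

    def serve(self, uri: str, render: Callable[[str], str]) -> str:
        # Cache hit: return the stored HTML without doing any processing.
        cached = self._pages.get(uri)
        if cached is not None:
            return cached
        # Cache miss: do the expensive work once and store the result.
        html = render(uri)
        self._pages[uri] = html
        return html

    def invalidate(self, uri: str) -> None:
        # Drop a single page so that the next request re-renders it.
        self._pages.pop(uri, None)
```

Only the first request for a URI pays the rendering cost. Every later request is a dictionary lookup until `invalidate` is called for that URI.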

So the second option was to use a server-side static file caching tool, such as Varnish in front of Apache or Nginx’s built-in FastCGI cache, to speed up response times and increase the number of requests per second the server can handle.

Option 2

Use a server-side static file caching tool, such as Varnish in front of Apache or Nginx’s built-in FastCGI cache, to speed up response times and increase the number of requests per second the server can handle.

When I looked into the details of setting up server-side static file caching, I was surprised by how much setup is required (this article provides an excellent, in-depth guide). While not overly complex, it was quite a bit more involved than I had expected, and the thought of having to do this for multiple sites hosted on multiple servers was off-putting. Testing locally and on staging servers also meant potentially repeating the process multiple times per site.

The other red flag was cache invalidation. When any content changes in your CMS, you ideally want only the affected cached pages to be invalidated (also known as cleared or busted). However, the common approach is to invalidate the entire cache. That means changing any field on the “about” page would invalidate the cache for the entire website, potentially hundreds or thousands of pages, even though only a single page had changed.

“There must be a simpler and better way to do this”, I thought, and set out to build a Craft plugin that enables static file caching with minimal setup and with intelligent cache invalidation.

Option 3 (the winner)

Build a Craft plugin that enables static file caching with minimal setup and with intelligent cache invalidation.

In the early planning stages I was reminded of a well-known quote that is attributed to Phil Karlton:

There are only two hard things in Computer Science: cache invalidation and naming things.

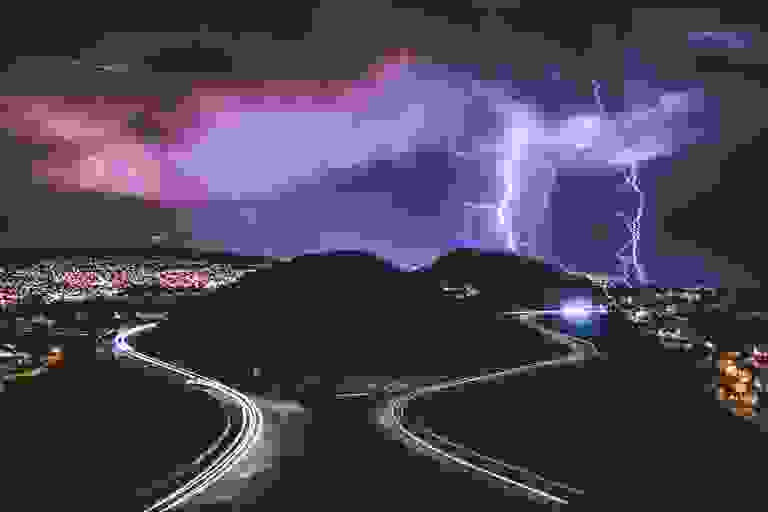

Naming the thing turned out to be the easier of the two. “Blitz”, meaning lightning in German, was a tribute to the official Craft CMS conference, DotAll, coming to Berlin in 2018. Cache invalidation, however, was a much deeper rabbit hole than I had expected, with lots of lessons learned along the way.

Today, installing and setting up the Blitz plugin takes less than a minute, and one-click updates are available through the official Craft Plugin Store. The performance gains are comparable to those of server-side static file caching, without any of the headache.

As noted above, cache invalidation, done right, is hard! Yet the Blitz plugin has an unfair advantage over the server-side static file caching options: it is intimately connected with the CMS. It uses native events in Craft to determine exactly which pages load which elements (entries, categories, users, etc.). It even goes a step further and determines which pages could possibly load which elements, so, for example, a page that lists the 10 most recently published articles will be invalidated when a brand-new article is published.
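The dependency tracking described above can be illustrated with a simplified model. The sketch below is hypothetical and does not use Craft’s events or Blitz’s code. `Element`, `DependencyTracker`, `track_element`, `track_query` and `pages_to_invalidate` are invented names, and an element query is reduced to a predicate that answers whether a given element could appear in its results.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple


@dataclass(frozen=True)
class Element:
    """Hypothetical stand-in for a Craft element such as an entry."""
    id: int
    section: str


# A tracked query is modelled as a predicate answering
# "could this element appear in the query's results?"
Query = Callable[[Element], bool]


@dataclass
class DependencyTracker:
    # element ID -> URIs of the pages that rendered that element
    element_pages: Dict[int, Set[str]] = field(default_factory=dict)
    # (URI, query) pairs for every element query run while rendering a page
    page_queries: List[Tuple[str, Query]] = field(default_factory=list)

    def track_element(self, uri: str, element: Element) -> None:
        self.element_pages.setdefault(element.id, set()).add(uri)

    def track_query(self, uri: str, query: Query) -> None:
        self.page_queries.append((uri, query))

    def pages_to_invalidate(self, saved: Element) -> Set[str]:
        # Pages that actually loaded this element...
        uris = set(self.element_pages.get(saved.id, set()))
        # ...plus pages whose queries could now include it (e.g. a new article).
        uris.update(uri for uri, query in self.page_queries if query(saved))
        return uris


tracker = DependencyTracker()
about = Element(id=1, section="pages")
tracker.track_element("/about", about)
# The homepage lists the latest articles, so it depends on any article.
tracker.track_query("/", lambda el: el.section == "articles")

print(tracker.pages_to_invalidate(Element(id=42, section="articles")))  # {'/'}
print(tracker.pages_to_invalidate(about))  # {'/about'}
```

Modelling queries as predicates deliberately errs on the side of invalidating: any new article clears the page that lists the latest articles, even in edge cases (such as a backdated post) where it would not make the top 10. Invalidating too often only costs a re-render, whereas invalidating too rarely serves stale content.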

Cache warming is another technique used by Blitz. Each time a cached page is invalidated, Blitz automatically regenerates the cache with the latest changes, so no unlucky visitor lands on an invalidated (and hence uncached) page and has to wait several seconds for it to load.
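Here is a minimal sketch of cache warming, again in hypothetical Python rather than Blitz’s actual implementation. `warm_cache` and its `render` callback are invented names, the cache is a plain dict mapping URIs to HTML, and a thread pool stands in for whatever background job a real system would use.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable


def warm_cache(
    cache: Dict[str, str],
    uris: Iterable[str],
    render: Callable[[str], str],
    max_workers: int = 4,
) -> None:
    """Regenerate the cached HTML for each invalidated URI.

    Fresh HTML is rendered first and only then written over the old entry,
    so a request arriving mid-warm gets the previous page rather than a
    cache miss that would trigger a slow render.
    """
    uris = list(dict.fromkeys(uris))  # de-duplicate while keeping order
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map runs render() concurrently and yields results in input order.
        for uri, html in zip(uris, pool.map(render, uris)):
            cache[uri] = html
```

Rendering the fresh page before overwriting the old entry is one design choice among several. Visitors may briefly see the previous version while warming runs, but no request falls through to a slow, uncached render. Clearing the entry first and then regenerating it is the stricter alternative, at the cost of a short uncached window.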

The Blitz plugin has become a mainstay on all the sites we build. It supports multi-site setups in Craft and often results in a TTFB of 100 ms or less, which makes a site feel extremely zippy and comfortably clears the 200 ms threshold used by PageSpeed Insights. It’s hard to imagine going back to not using some form of static file caching, and Blitz truly enables lightning-fast sites with a great authoring experience: the best of both worlds.

Blitz is available in the Craft Plugin Store and comes with a free trial so you can see just how much of a speed and performance boost it can give your site.