It’s not easy to admit this, but I’ve been doing testing wrong for the past 20 years. A recent revelation allowed me to cut through the noise (and the bullshit) and come out the other side feeling confident that my new approach to automated testing is finally aligned with my goals for it.

You’re doing it wrong #

There’s something about automated testing, or at least how people on the Internet talk about it, that has always made me feel like I’m doing something wrong. The dialogue tends to go something like this:

Me: I’m struggling to figure out how to write my tests. I know what I want to test, but how do I translate that into an automated test suite?

Someone on the Internet: Write unit tests that test the smallest unit of logic possible, at most a single function.

Me: What about functions that have dependencies?

Someone on the Internet: Write your functions using dependency injection so it is easier to test. Then you can mock the dependencies.

Me: What about when functions need to read and write to and from the database?

Someone on the Internet: Your unit tests should be able to be executed in isolation without a database.

Me: But most of what I want to test requires that my app works within the context of a broader system.

Someone on the Internet: It sounds like your app has too many side effects.

Me: Yes, it has lots of side effects, which is how it provides value to its users.

Someone on the Internet: You’re doing it wrong.

I tend to come away from such dialogues, regardless of whether it was me or someone like me asking those questions, feeling inadequate.

And yet I’ve done plenty of research on the topic. Almost all the literature (and tutorials) I’ve found focus on the mechanics of testing rather than its goals. There is a major lack of literature out there that explains the why of testing.

Here are the commonly preached testing “best practices” that I see:

- Unit tests should test small units of logic and should be executable in isolation.

- If you need to use a database or other dependencies in your tests, you should use mocks.

- Your tests should run in as little time as possible.

- If you manage to achieve 100% code coverage, then you’re doing it right.

The real problem #

The real problem with these so-called best practices is that they aim primarily for speed and code coverage. Having fast tests that cover your entire codebase is a sensible thing to aim for, but it should not be the primary goal of a test suite.

The reason for testing software is to ensure that the features it delivers work!

I’ve come to realise that writing low-level unit tests that, more often than not, test the correctness of the implementation of features rather than the features themselves, is a waste of time. They don’t deliver on the promise of automated testing. On the contrary, they often result in a false sense of security.

Achieving 100% code coverage doesn’t guarantee that your application’s features work as expected, that they are bug-free, or that they are resilient to edge cases. All it proves is that you’ve written code that exercises other code, not that you’ve written valid tests.

To illustrate the point, here’s a function that returns true if the provided username is not already taken and is not a reserved username.

```php
function isUsernameAvailable(string $username): bool
{
    // Returns the matching user, or null if no user has this username.
    $user = getUserByUsername($username);
    $reservedUsernames = ['admin', 'test'];

    return $user === null && !in_array($username, $reservedUsernames);
}
```

And here’s a test that results in 100% code coverage by testing the function using a randomly generated username.

```php
use Faker\Factory as FakerFactory;

test('returns true if the username is available', function () {
    $username = FakerFactory::create()->userName();

    expect(isUsernameAvailable($username))->toBeTrue();
});
```

But notice how this test is insufficient. It tests the function, but not the feature! To make this “feature complete”, we need an additional test that ensures that a reserved username is unavailable.

```php
test('returns false if the username is reserved', function () {
    $username = 'admin';

    expect(isUsernameAvailable($username))->toBeFalse();
});
```

Of course, anyone can write poor tests (I’m living proof!). The point, however, is that code coverage alone is a meaningless metric.
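The same feature-first thinking can be taken one step further. `isUsernameAvailable()` implies three behaviours, and neither test above checks the third: a username that already belongs to an existing user. Here is a minimal, self-contained sketch that checks all three. It assumes a hypothetical in-memory `getUserByUsername()` in place of the real database lookup, and prints a PASS/FAIL line per behaviour instead of using Pest, so that it runs on its own.

```php
<?php

// Hypothetical stand-in for the app's database lookup: returns a user
// record (here just an array) or null when no user has that username.
function getUserByUsername(string $username): ?array
{
    $existingUsers = ['alice' => ['username' => 'alice']];

    return $existingUsers[$username] ?? null;
}

function isUsernameAvailable(string $username): bool
{
    $user = getUserByUsername($username);
    $reservedUsernames = ['admin', 'test'];

    return $user === null && !in_array($username, $reservedUsernames, true);
}

// One check per behaviour of the feature, not per line of code.
$featureCases = [
    'an unused username is available' => ['bob', true],
    'a reserved username is unavailable' => ['admin', false],
    'a taken username is unavailable' => ['alice', false],
];

foreach ($featureCases as $description => [$username, $expected]) {
    $actual = isUsernameAvailable($username);
    echo ($actual === $expected ? 'PASS' : 'FAIL') . ": {$description}\n";
}
```

Each entry in `$featureCases` names a behaviour of the feature, so a missing entry is a missing feature check, whatever the coverage report says.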

Here’s how PHPUnit, one of the most popular testing frameworks for PHP, recommends organising tests.

Notice how the classes in the tests/unit directory mirror the classes (and the structure) of the application in the src directory. This understandably makes it easier to test the implementation of the code and ensure code coverage.

And herein lies the trap.

Testing is hard, so the tendency is to follow approaches that make it easier. But doing so can leave your tests misaligned with your goals.

Remember, the goal of your tests is to ensure that your app’s features work as expected. The screenshot above shows one (only one!) integration test, aptly named PuttingItTogetherTest. As if the application classes working together is of little significance. Oh, the humility!

But, alas, this is how I had been organising my test suites for the past 20 years of software development, never feeling fully satisfied that they were aligned with my goals for testing.

I threw out the blueprint I’d been using and started with a blank slate.

Why and what to test #

The main reasons for testing are release confidence (the confidence to release new features) and reliability (the assurance that the features work as expected).

Tests should be based on their purpose, not their mechanics!

Having seen the light, here is how I’ve started defining my tests.

Feature Tests #

Feature tests test the functionality of the application. Since most applications contain multiple features, feature tests should make up most of the automated tests.

Feature tests can be thought of as internal documentation about how the application functions. They should be strategic, self-documenting, demonstrate thoroughness, and help avoid bugs.

Some examples of feature tests in one of my applications are:

- Element is not tracked when it is unchanged.

- Element is tracked when its status is changed.

- Element is tracked when it expires.

- Element is tracked when it is deleted.

- Element is tracked when one or more of its attributes are changed.

- Element is tracked when one or more of its fields are changed.

Notice how the test names are descriptive yet terse. Read together, they give you a sense of the application’s functionality. You may already (correctly) conclude that this app “tracks an element” when it is modified or changed in specific ways.
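To show how such names can drive the tests themselves, here is a hypothetical sketch (not the author’s application code): an `isElementTracked()` function over a simplified element model, with each check keyed by the descriptive names listed above. The element structure (`status`, `expiryDate`, `attributes`, `fields`) and the rule that a `null` after-state means deletion are assumptions made for illustration. Arrow functions require PHP 7.4+.

```php
<?php

// Hypothetical element model: an array with 'status', 'expiryDate'
// (DateTimeImmutable or null), 'attributes' and 'fields'.
// A null $after means the element was deleted.
function isElementTracked(array $before, ?array $after, DateTimeImmutable $now): bool
{
    if ($after === null) {
        return true; // Deleted.
    }

    if ($after['expiryDate'] !== null && $after['expiryDate'] <= $now) {
        return true; // Expired.
    }

    // Loose array comparison: equal when the key/value pairs match.
    return $before['status'] !== $after['status']
        || $before['attributes'] != $after['attributes']
        || $before['fields'] != $after['fields'];
}

$now = new DateTimeImmutable('2024-01-01 12:00:00');
$element = [
    'status' => 'live',
    'expiryDate' => null,
    'attributes' => ['title' => 'Home'],
    'fields' => ['body' => 'Hello'],
];

// Each key reads as one line of the feature specification.
// `[...] + $element` overrides only the listed keys.
$tests = [
    'Element is not tracked when it is unchanged.' =>
        fn () => !isElementTracked($element, $element, $now),
    'Element is tracked when its status is changed.' =>
        fn () => isElementTracked($element, ['status' => 'disabled'] + $element, $now),
    'Element is tracked when it expires.' =>
        fn () => isElementTracked($element, ['expiryDate' => $now->modify('-1 minute')] + $element, $now),
    'Element is tracked when it is deleted.' =>
        fn () => isElementTracked($element, null, $now),
    'Element is tracked when one or more of its attributes are changed.' =>
        fn () => isElementTracked($element, ['attributes' => ['title' => 'About']] + $element, $now),
    'Element is tracked when one or more of its fields are changed.' =>
        fn () => isElementTracked($element, ['fields' => ['body' => 'Goodbye']] + $element, $now),
];

foreach ($tests as $name => $test) {
    echo ($test() ? 'PASS' : 'FAIL') . ": {$name}\n";
}
```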

Integration Tests #

Integration tests test the interoperability between your app and third-party software or services. Think of integrations with other apps (within the same system) and external APIs.

Integration tests are where mocking dependencies becomes useful. Mocking an API endpoint, for example, makes it much easier to test than actually sending requests to it, although sending real requests may sometimes be desirable.

Examples of integration tests include:

- A webhook event with a specific source triggers a refresh.

- A webhook event with a specific element does not trigger a refresh.

Integration tests are less intuitive to read, as they tend to be specific to the context of the software with which they integrate.
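Mocking at an integration boundary can be sketched without any framework. Below, the hypothetical `RefreshClient` interface stands in for an external API, `FakeRefreshClient` records calls instead of sending HTTP requests, and the hypothetical `WebhookHandler` only triggers a refresh for events from a configured source. These names and the event shape are assumptions, loosely modelled on the webhook examples above. Constructor property promotion requires PHP 8.0+.

```php
<?php

// Hypothetical boundary to a third-party service (e.g. an HTTP API).
interface RefreshClient
{
    public function refresh(string $reason): void;
}

// Test double: records each call instead of sending a real request.
final class FakeRefreshClient implements RefreshClient
{
    /** @var string[] */
    public array $calls = [];

    public function refresh(string $reason): void
    {
        $this->calls[] = $reason;
    }
}

// Hypothetical handler: only events from the trusted source trigger a refresh.
final class WebhookHandler
{
    public function __construct(
        private RefreshClient $client,
        private string $trustedSource
    ) {
    }

    public function handle(array $event): void
    {
        $source = $event['source'] ?? null;

        if ($source === $this->trustedSource) {
            $this->client->refresh("webhook from {$source}");
        }
    }
}

$client = new FakeRefreshClient();
$handler = new WebhookHandler($client, 'inventory-service');

// A webhook event with the trusted source triggers a refresh...
$handler->handle(['source' => 'inventory-service']);
// ...while an event from any other source does not.
$handler->handle(['source' => 'unknown-service']);

print_r($client->calls);
```

Swapping in the fake keeps the test fast and deterministic; an occasional test against the real service can still complement it.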

Interface Tests #

Interface tests test the user experience (UX) of the application via one or more of its interfaces. This can include a graphical user interface (GUI), a command-line interface (CLI) and an application programming interface (API).

Interface tests are high-level tests that can simulate browsers, consoles and HTTP requests.

Examples of interface tests include:

- User is redirected to the login page when accessing the dashboard as an unauthenticated user.

- User sees a chart when accessing the dashboard as an authenticated user.

Interface tests tend to be easy to read (and write) due to their high-level nature and intuitive test cases.
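Because interface tests simulate requests rather than call internal functions, they can be sketched against a single entry point. The `handleRequest()` function below is a hypothetical stand-in for an application’s HTTP layer (a real framework would provide its own request and response objects), and the checks mirror the two dashboard examples above. It uses `str_contains()`, so it requires PHP 8.0+.

```php
<?php

// Hypothetical application entry point: maps a simulated request to a
// response array, so the test needs no browser or web server.
function handleRequest(string $path, ?string $userId): array
{
    if ($path !== '/dashboard') {
        return ['status' => 404, 'headers' => [], 'body' => 'Not found'];
    }

    if ($userId === null) {
        // Unauthenticated users are sent to the login page.
        return ['status' => 302, 'headers' => ['Location' => '/login'], 'body' => ''];
    }

    return ['status' => 200, 'headers' => [], 'body' => '<div class="chart"></div>'];
}

$guestResponse = handleRequest('/dashboard', null);
$redirectsGuest = $guestResponse['status'] === 302
    && $guestResponse['headers']['Location'] === '/login';

$userResponse = handleRequest('/dashboard', 'user-42');
$showsChart = $userResponse['status'] === 200
    && str_contains($userResponse['body'], 'class="chart"');

echo 'User is redirected to the login page when accessing the dashboard as an unauthenticated user: '
    . ($redirectsGuest ? 'PASS' : 'FAIL') . "\n";
echo 'User sees a chart when accessing the dashboard as an authenticated user: '
    . ($showsChart ? 'PASS' : 'FAIL') . "\n";
```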

End-to-End Tests #

End-to-end tests test the goals (business or otherwise) of the application. These can include detecting (possibly temporary) issues in broader parts of the system (and possibly providing fallback behaviour), demonstrating different behaviour for different people, handling pain points and providing business metrics.

End-to-end tests can be difficult to automate and may require external testing systems in addition to human input and moderation.

Examples of end-to-end tests include:

- The chart on the dashboard provides accurate and valuable insights to the user.

- The data provided via the API justifies the price tiers of the service we offer.

Tests as documentation #

When we categorise tests as above and give them descriptive names, we end up with “internal documentation” that not only describes, but also verifies, the behaviour of our application. This can be invaluable, especially in complex systems and in open-source software, where bug reports can immediately be checked against a test specification.

I’ve started generating test specifications for plugins. This test specification is a living document that grows over time and is automatically re-generated each time the tests run.
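The post doesn’t show how the specification is generated, so the following is only a hypothetical sketch of the idea: given test descriptions grouped by category and test file (however your test runner exposes them), render them as a Markdown document. The input structure, the file names and the `renderSpecification()` function are assumptions, not part of Blitz or Pest. It requires PHP 8.0+ only for the `str_*` checks used to verify it.

```php
<?php

// Hypothetical input: test descriptions grouped by category, then by test
// file, as they might be collected from a test run.
function renderSpecification(array $suites): string
{
    $lines = ['# Test Specification', ''];

    foreach ($suites as $category => $files) {
        $lines[] = "## {$category} Tests";
        $lines[] = '';

        foreach ($files as $file => $descriptions) {
            $lines[] = "### {$file}";
            $lines[] = '';

            foreach ($descriptions as $description) {
                $lines[] = "- {$description}";
            }

            $lines[] = '';
        }
    }

    return implode("\n", $lines);
}

$spec = renderSpecification([
    'Feature' => [
        'ElementTrackingTest' => [
            'Element is not tracked when it is unchanged.',
            'Element is tracked when its status is changed.',
        ],
    ],
    'Interface' => [
        'DashboardTest' => [
            'User sees a chart when accessing the dashboard as an authenticated user.',
        ],
    ],
]);

echo $spec;
```

Running something like this at the end of every test run is what would keep the document “living”; how the descriptions are collected depends on the test runner.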

Here is the test specification for the Blitz plugin; I encourage you to take a peek.

Visualised, it looks as follows.

Testing in practice #

How you implement your tests will depend on what code testing framework you use and what types of automated tests it makes available. Part of my “awakening” happened when I moved from Codeception to the excellent Pest PHP. While that transition significantly improved the experience of writing tests, it was not essential to the process.

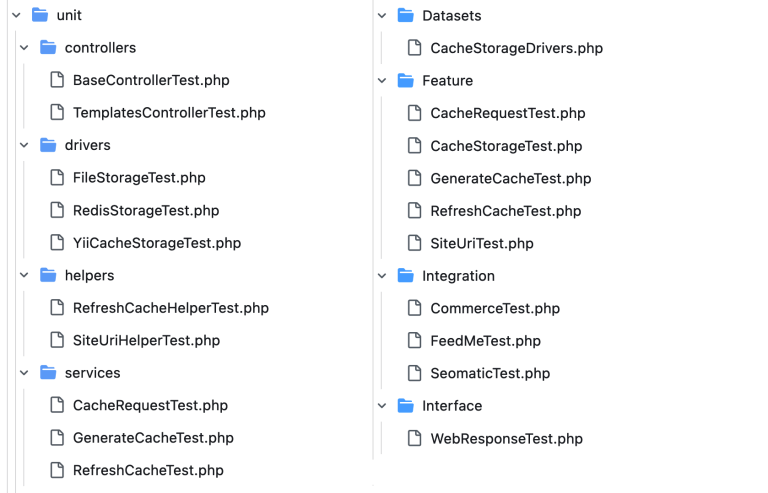

Here are the Blitz test files and structure before (on the left) and after (on the right) applying the new testing methodology.

Notice how instead of tests mirroring the source code structure, tests now fall into one of the four categories above and cover all test cases related to each feature/integration/interface using a single file.

Before, integration and interface tests were lumped together with other tests to mirror the source code. Now, they exist as their own test files, each with their own purpose.

Also, the cache storage drivers were previously each tested individually, even though those tests were all essentially testing the same thing. They were replaced by a single CacheStorageTest test file and a CacheStorageDrivers dataset file that supplies each of the drivers to the tests.
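In Pest, a shared dataset is declared with `dataset()` and attached to a test with `->with()`. The framework-free sketch below imitates the same pattern so that it runs on its own: `cacheStorageDrivers()` returns one factory per driver, and each behaviour is written once and checked against every driver. The `CacheStorage` interface and both drivers are hypothetical simplifications, not the actual Blitz drivers. Constructor property promotion requires PHP 8.0+.

```php
<?php

// Hypothetical contract that every cache storage driver implements.
interface CacheStorage
{
    public function save(string $key, string $value): void;
    public function get(string $key): ?string;
    public function delete(string $key): void;
}

// Keeps cached values in memory.
final class ArrayStorage implements CacheStorage
{
    private array $items = [];

    public function save(string $key, string $value): void
    {
        $this->items[$key] = $value;
    }

    public function get(string $key): ?string
    {
        return $this->items[$key] ?? null;
    }

    public function delete(string $key): void
    {
        unset($this->items[$key]);
    }
}

// Keeps cached values as files in a directory.
final class FileStorage implements CacheStorage
{
    public function __construct(private string $directory)
    {
    }

    public function save(string $key, string $value): void
    {
        file_put_contents($this->path($key), $value);
    }

    public function get(string $key): ?string
    {
        $path = $this->path($key);

        return is_file($path) ? file_get_contents($path) : null;
    }

    public function delete(string $key): void
    {
        $path = $this->path($key);

        if (is_file($path)) {
            unlink($path);
        }
    }

    private function path(string $key): string
    {
        return $this->directory . '/' . md5($key) . '.html';
    }
}

// Plays the role of a shared dataset: one factory per driver.
function cacheStorageDrivers(): array
{
    return [
        'array' => fn (): CacheStorage => new ArrayStorage(),
        'file' => function (): CacheStorage {
            $directory = sys_get_temp_dir() . '/cache-storage-' . uniqid();
            mkdir($directory);

            return new FileStorage($directory);
        },
    ];
}

// Each behaviour is written once and checked against every driver.
$results = [];

foreach (cacheStorageDrivers() as $driver => $makeStorage) {
    $storage = $makeStorage();

    $storage->save('/about', '<h1>About</h1>');
    $results["{$driver}: a saved value can be read back"] = $storage->get('/about') === '<h1>About</h1>';

    $storage->delete('/about');
    $results["{$driver}: a deleted value is no longer returned"] = $storage->get('/about') === null;
}

foreach ($results as $name => $passed) {
    echo ($passed ? 'PASS' : 'FAIL') . ": {$name}\n";
}
```

Adding a new driver then means adding one factory to the dataset, not writing another test file.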

Even with the new testing approach, all 110 tests are executed in just 4 seconds!

I will leave the details of how I’ve started implementing tests for another time, but suffice it to say that the code testing framework you use is less relevant than how you think about and achieve the goals of testing.

Revelation #

So far, it might sound like the revelation has been just a shift in perspective. But it goes much deeper. It is an entire overhaul in how tests are considered, structured, written and evaluated.

Before the revelation, I was writing unit tests – one test file per class and one or more tests per method in that class – with the goal of covering as many methods as possible. This leads to a test suite that tests the application’s implementation and strives for code coverage.

Since the revelation, I have been writing individual feature/integration/interface test files as defined above, each with as many tests as necessary. I have no use for the concept of a “unit” test nor the distinction between unit and functional tests (which is still unclear to me). Instead, the goal of my tests is to cover as many features as possible. This leads to a test suite that tests the application’s functionality and strives for feature coverage.

It may seem like a subtle difference, but writing tests that encourage quality assurance of features, rather than tests that increase code coverage, feels like a world of difference. And yet it took me 20 years to make the distinction.

I don’t know that I’m doing it right, but I can confidently say that I’m doing it less wrong!

And regardless of what “someone on the Internet” might say, I feel much closer to cracking how to build release confidence and reliability into my software applications.

Broader testing applications #

The great thing about this testing methodology, and another reason I believe it holds up well, is that it can be applied to any system (not just software). Let’s take a (non-self-driving) car as a trivial example.

Feature Tests:

- Accelerates when the accelerator pedal is applied.

- Slows down when the brake pedal is applied.

- Warms the driver seat when the switch is at the “on” position.

Integration Tests:

- Plays audio through the speakers when connected to a music app.

- Announces turn-by-turn directions when navigating to a destination.

Interface Tests:

- The seat warmer switch can easily be turned on or off while driving.

- The navigation app is always visible and audible while driving.

End-to-End Tests:

- The driver seat is heated to a comfortable temperature.

- The volume of audio/music is sufficiently turned down when turn-by-turn directions are announced.

Just by reading these tests, it becomes clear that this contraption’s features include speeding up, slowing down and keeping the driver’s bum warm.

It can play music and provide turn-by-turn directions using third-party apps. The seat warmer and navigation app can be safely used while driving. And finally, the driver’s bum will never get too hot, nor will they miss their turn due to the music being too loud.

I’m confident you can apply the same testing methodology to any household item: a chair, a vacuum cleaner, or a food processor. I’ve actually found it to be a fun little exercise!